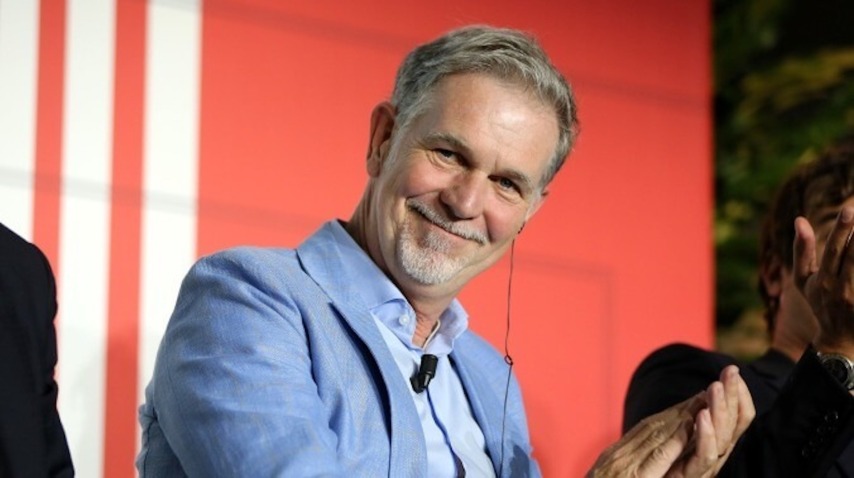

Netflix cofounder Reed Hastings to "help humanity progress" with AI board seat

Hastings has been appointed to the board of Anthropic, which develops the popular Claude LLM.

Photo: Ernesto S. Ruscio/Getty Images/Netflix

It’s a good thing Netflix cofounder Reed Hastings doesn’t work at the streaming service anymore, because the fight to keep thousands of copyrighted works away from the clutches of AI may have just gotten that much harder. Hastings, who left his role as Netflix co-CEO in 2023 and as chairman earlier this year, is joining the board of major AI firm Anthropic, per The Hollywood Reporter.

“The Long Term Benefit Trust appointed Reed because his impressive leadership experience, deep philanthropic work, and commitment to addressing AI’s societal challenges makes him uniquely qualified to guide Anthropic at this critical juncture in AI development,” Buddy Shah, chair of Anthropic’s Long Term Benefit Trust, wrote in a statement.
