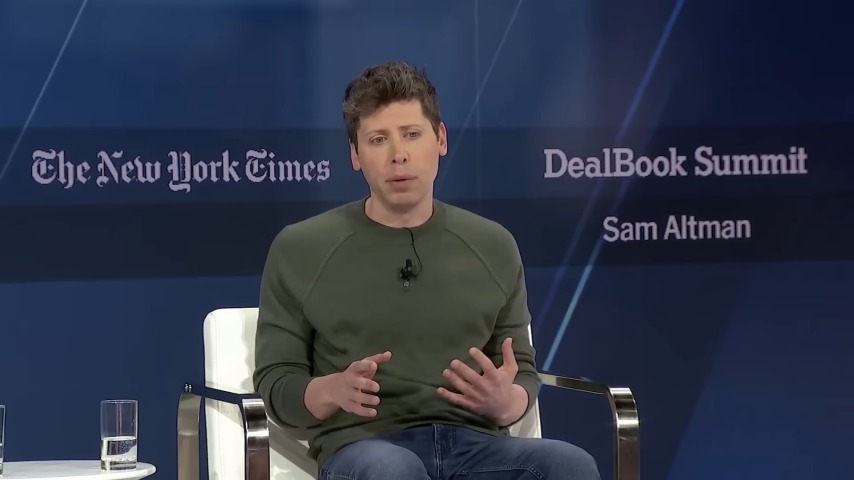

Of course, any decision to roll back humanistic protections is a political stance, but the Sam Altman-led company isn’t framing it that way. These changes come as part of an update to OpenAI’s Model Spec, a lengthy document detailing how the company seeks to train its AI models. Now, it has a new guiding principle: “Do not lie,” either by making “intentionally untrue statements” or “deliberately withholding information that would materially change the user’s understanding of the truth.”

In practice, this means that ChatGPT is now being trained to embrace “intellectual freedom… no matter how challenging or controversial a topic may be.” Where the bot previously refused to answer questions or engage with certain topics, it will now offer multiple perspectives in a particularly pointed effort to remain “neutral.”

“This principle may be controversial, as it means the assistant may remain neutral on topics some consider morally wrong or offensive. However, the goal of an AI assistant is to assist humanity, not to shape it,” the Model Spec states in a section titled “Seek the truth together.”

An OpenAI spokesperson asserted to TechCrunch that these changes are not a response to the Trump administration, and instead reflect the company’s “long-held belief in giving users more control.” But obviously the timing is notable. Last month, Trump announced that he would back a $500 billion spend on AI infrastructure for the Stargate Project, a joint venture between OpenAI, SoftBank, Oracle, and MGX. Google also recently dropped its pledge not to use AI for weapons or surveillance. Per the updated Spec, ChatGPT will still tell users that “fundamental human rights violations” like genocide and slavery are “wrong,” and that’s the closest thing to good news you’ll find here.
